On Friday, Sam Altman was unceremoniously fired as CEO of OpenAI, with the board citing that he was “not consistently candid with his communications…hindering [the board’s] ability to exercise its responsibilities.” Greg Brockman, then-President and board member, would later resign from OpenAI, claiming that neither he nor Sam was given much of a heads up, and that fellow co-founder and board member Ilya Sutskever had fired Sam via Google Meet, soon after which Brockman was told (again, via Google Meet) that he would be removed from the board and that Sam had been fired.

OpenAI, a company currently working on financing that would make it worth $86 billion, fired two of its most prominent leaders via the second-worst teleworking software, blindsiding the world, including Microsoft, who found out a minute before everybody else despite having invested $13 billion in the company.

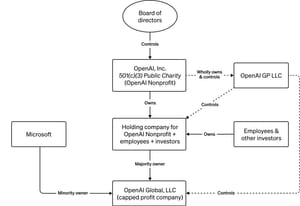

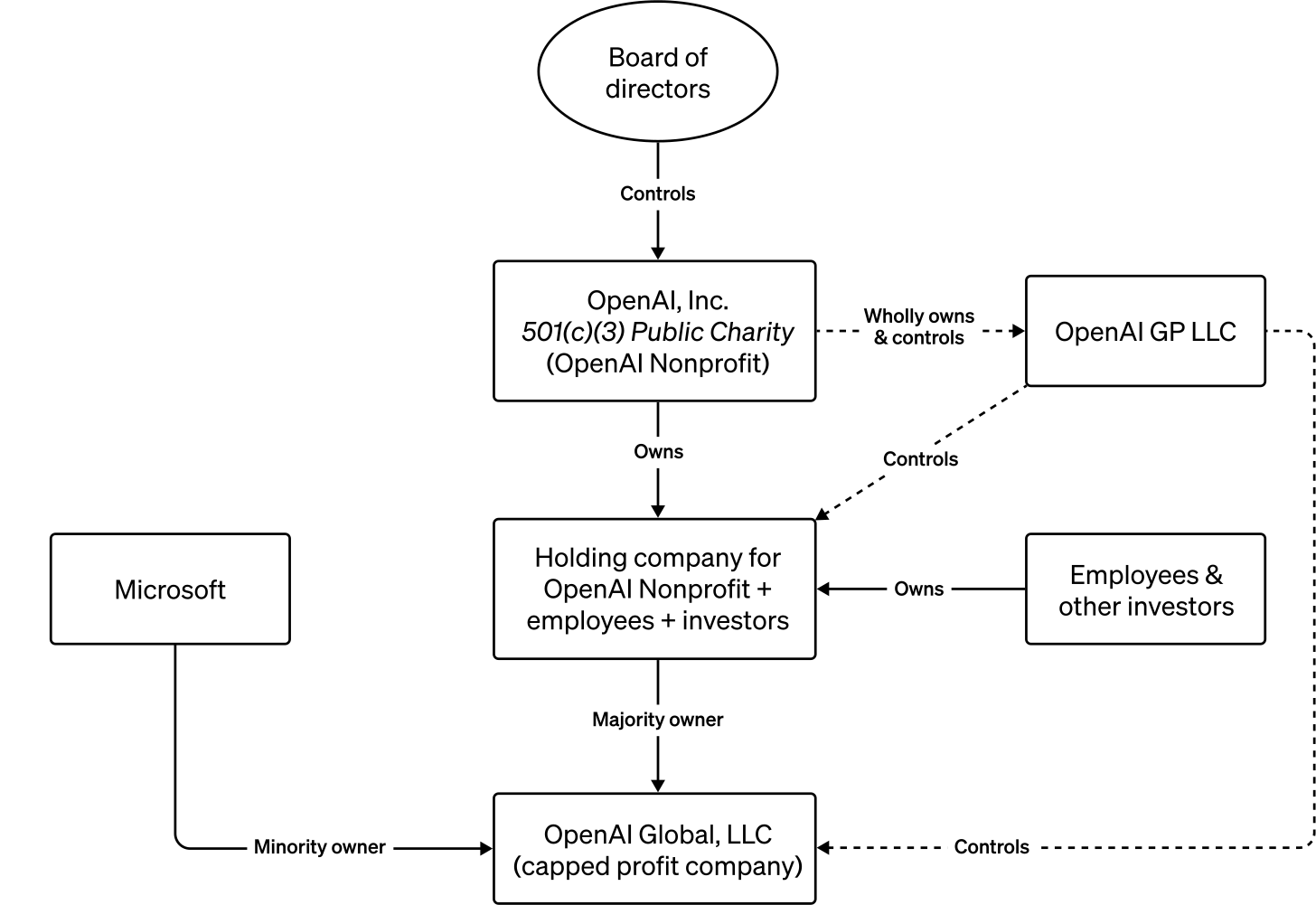

At this point, it’s important to learn a little bit about how weirdly OpenAI is structured as a company.

To explain, OpenAI as you know it is actually three companies, and its mission is, and I quote, to “create a safe AGI that is broadly beneficial,” which can refer to everything from a totally sentient artificial intelligence to, per Sam Altman, the “equivalent of a median human that you could hire as a co-worker.”

OpenAI, Inc. is a 501(c)(3) Public Charity — a non-profit that owns and controls the managing entity, OpenAI GP LLC, which controls the holding company for the OpenAI nonprofit entity, which is the majority owner of OpenAI Global, LLC, which is the “capped” for-profit entity. It is the bizarre result of OpenAI’s 2019 move to a “capped” for-profit model — though one shouldn’t give them any credit, as investors are capped at one hundred times their initial investment.

While this may seem confusing, the ultimate result is that OpenAI is controlled at its heart not by its investors, but by a board of directors that doesn’t hold any equity. To quote ArsTechnica:

OpenAI has an unusual structure where its for-profit arm is owned and controlled by a non-profit 501(c)(3) public charity. Prior to yesterday, that non-profit was controlled by a board of directors that included Altman, Brockman, Ilya Sutskever and three others who were not OpenAI employees: Adam D’Angelo, the CEO of Quora; Tasha McCauley, an adjunct senior management scientist at RAND corporation; and Helen Toner, director of strategy and foundational research grants at Georgetown’s Center for Security and Emerging Technology. Now, only Sutskever, D'Angelo, McCauley, and Toner remain.

It’s a deeply peculiar situation where a board almost entirely made up of people who don’t work at a company has ousted its CEO and President from both the company and the board itself. While some are comparing it to Steve Jobs’ ousting from Apple, that was a decision made by several profit-hungry business magnates, as opposed to OpenAI’s gaggle of research scientists, most notably Ilya Sutskever, who co-founded the company and, according to ArsTechnica, led the coup against Altman due to “concerns about the safety and speed of OpenAI’s tech deployment.”

Regardless of the why, it’s simply remarkable that this took place in the manner it did. Sam Altman was (is?) one of the most notable executives in the tech industry, called the “Oppenheimer of Our Age” by New York Magazine, and was booted out without compensation by a bunch of scientists and the CEO of the slightly better version of Yahoo! Answers. He had no equity in the company (other than a small investment through YCombinator), and while he likely was paid for his role, he lacked any of the usual corporate and legal machinery that has protected CEOs like Mark Zuckerberg from being fired.

Sidenote: Currently, some in the valley are complaining about OpenAI’s board being “inexperienced,” as if tech’s boards of directors have traditionally done a good job. The same board that fired Steve Jobs was filled with prominent venture capitalists and executives, as were the boards of WeWork, Theranos (which included military leaders and former secretaries of state), and Juicero. Jawbone, a company that went from a $3bn valuation to bankruptcy, had industry figureheads like Ben Horowitz, and doomed entertainment startup Quibi had the CEO of Condé Nast and the founding partner of top entertainment law firm Ziffren Brittenham LLP. In fact, I’d argue that the boards of major tech firms have overwhelmingly failed to police their companies, rarely, if ever, taking action against executives behaving badly.

According to an internal memo from OpenAI’s Chief Operating Officer Brad Lightcap, Altman’s firing was not a result of “malfeasance or anything related to our financial, business, safety, or security/privacy practices,” but due to the aforementioned communication issues between Altman and the board. While I don’t buy that explanation for a second, it suggests that there was something Altman did in the last two weeks that fundamentally turned the board against him.

More bizarrely, Lightcap added that he and the rest of OpenAI’s management team were still having “multiple conversations with the board to try to better understand the reasons and process behind the decisions.” In plain English, a C-level executive of a company still does not exactly understand why the CEO of his company was fired, despite multiple discussions with the board.

A company likely worth nearly $100 billion that’s partly-owned by a $2.75 trillion tech company has been decapitated by a group of scientists potentially based on concerns over “AI safety.” As Semafor’s Louise Matsakis put it:

If true, I think this will completely radicalize people working on AI one way or the other. Either you say, "wow, this threat was so extreme that they got rid of Altman," or "these people are so crazy in their beliefs that they got rid of Altman."

In the event that the “AI safety” story is true, this is likely a watershed moment in the tech industry. Altman was OpenAI’s face and voice, a relentless marketer and fundraiser, ever-espousing how much ChatGPT could do while adding how dangerous it could become. At the APEC conference a day before his firing, Altman touted that ChatGPT would take a leap forward that “no one expected,” just over a week after OpenAI previewed GPT-4 Turbo, along with a tool to create AI models without being able to code and a partnership where OpenAI would directly solicit private datasets to train its own models. Altman was clearly gunning for scale, and to turn OpenAI into the name synonymous with “AI,” and to be at the helm of the only current company I would expect to grow to the scale of a Google or Meta.

And, perhaps, that’s what the board feared.

The New Gold Rush

The growth-at-all-costs Rot Economy model that the startup world follows is absolutely antithetical to creating a company that truly “benefits humanity.” Artificial intelligence has become one of the few reliable roads to capital in the current economy, with venture capitalists sinking more than a quarter of their startup dollars into AI-related companies in 2023. While one can only guess at what Altman wanted to do, it’s easy to assume he wanted to do it fast, shoving increasingly “powerful” tech into the hands of as many people as possible as a means of making OpenAI and ChatGPT grow larger and more ubiquitous — regardless of the fact that ChatGPT4 was a marked downgrade in reliability.

OpenAI was worth $14 billion in 2021, and grew to $29 billion in 2023, and as I previously noted, OpenAI has been trying to raise a round to value it at $86 billion. ChatGPT is estimated to cost hundreds of thousands of dollars a day to run, and the massive push to grow both the complexity and scale of the operation is deeply unsustainable. OpenAI is allegedly on track to generate $1 billion a year in revenue in the next year, but if it’s not profitable at this scale, it’s likely that Altman’s mission has been to make the company more money rather than develop an AGI or, perish the thought, make a sustainable company that doesn’t have to continually live on venture-backed welfare.

OpenAI was already in a crisis before Altman was fired. As Alex Kantrowitz noted a few weeks ago, its position as a for-profit entity is deeply rickety, with its core business proposition under threat from other companies undercutting them with more-affordable access to — or customizability within — competing Large Language Models. While we will find out exactly what happens next in the following days or weeks, it’s likely that Altman wanted OpenAI to go in a different direction to the board — one that allowed it to continue to scale in an unsustainable way, or that would work against the non-profit’s mission.

There are, of course, more depressing possible reasons. Annie Altman, Sam’s sister, has made multiple allegations against Sam and his brother Jack, as well as framing him as a manipulative and cold individual. The removal of such a high-profile CEO from such a high-profile company is relatively unheard of, especially without a clear reason or a public inflection point. Up until a day before the firing, Altman was still being hailed as a technolojesus that would change the world forever. While the board has stated that there wasn’t any malfeasance or financial problems, and that this was simply a communication issue, it’s incredibly hard to tell what communication problems could give rise to such a sudden end to Altman’s tenure.

There may be some clues. According to Bloomberg, the board had already been sparring with Altman over him courting Middle Eastern sovereign wealth funds to create an AI chip startup, as well as Softbank’s Masayoshi Son, a man who loves to burn money. Furthermore, Altman had allegedly been trying to raise these funds off of OpenAI’s name, and the board feared that he wouldn’t follow their governance models in his other companies.

What we’re going to see as a result is a dramatic fight between the Rot Economists of the Valley and those who believe that the technology industry should build things that people actually want. Marc Andreessen — who has already invested billions in artificial intelligence companies — has complained that copyright payments for AI training data are “detrimental to [Andreessen Horowitz’s] investments,” and that it would “significantly disrupt” the continued development of artificial intelligence. Brian Armstrong, CEO of Coinbase, is blaming Altman’s firing on “decels” and “effective altruists” that have “destroyed a shining star of American capitalism,” decrying forces like “woke non-profit boards” and “nonsensical regulations” while claiming that effective altruism (rather than a $10bn fraud) “destroyed lots of value in crypto.”

The effective altruism angle is weirder still when you consider that just one of the board members who voted to oust Sam Altman — Tasha McCauley — has any links to the effective altruism movement. McCauley, in addition to her work as CEO of computer vision startup Fellow Robots, is a trustee of the UK branch of EA charity Effective Ventures, which, incidentally, is currently being investigated by the UK Charity Commission following the implosion of FTX. From what I can tell, no other member subscribes to the effective altruism ideology, or has any meaningful links to the movement.

Armstrong also added that it was “probably a mistake” that OpenAI didn’t exist to make money or maximize shareholder value — something I’d argue is closer to the heart of the issue than anyone currently realizes.

I believe that OpenAI is the beginning of a reckoning in Silicon Valley, where the venture community is forced to consider that the only thing that grows forever is cancer. There will be some that see this as an opportunity to slow down — to build sustainably, profitably, and in a way that makes companies last for decades or centuries, and others that see Altman’s firing as a gun to the head of their growth-at-all-costs money machine. They fear “decelerationists” that will take a fire extinguisher to their perpetually-burning piles of money, because that fire is what keeps them warm and brings them attention, even if the human and capital costs are continually painful.

In many ways, Altman is symbolic of that kind of Valley — a former founder of a failed startup that he nevertheless was able to sell, later becoming a partner at Silicon Valley darling Y Combinator and a prolific investor. He’s wealthy, white, the right side of 40, an anti-remote executive who believes that people are “much smarter and savvier than a lot of the so-called experts think,” and believes that “colleges prioritize making people safe over everything else and produced a generation afraid to fail…and thus [said generation] is on pace to accomplish extremely little.”

Altman is the martyr of the “effective accelerationist” movement that has turned the growth-at-all-costs model into a religion, one led by Marc Andreessen, who notably believes that risk management is one of the tech industry’s enemies. This movement is spearheaded by extremely wealthy men who regard any force that stops any startup from growing its revenue as a prime evil, and it’s one that is currently mourning Altman’s firing as if he’d died suddenly of unknown causes.

The aftermath of this situation will be messy, likely involving lawsuits, investor and employee revolts, and an internal fracturing within OpenAI. Unsurprisingly, Altman and Brockman quickly found new roles, joining Microsoft to “lead a new advanced AI research team.” Given the rumors of Altman’s desire to found a competing artificial intelligence company, it’s unclear whether this will be a long-term move for Altman, or merely a temporary career detour.

After firing Altman, OpenAI’s board installed former CTO Mira Murati as a caretaker CEO. Her brief (Liz Truss brief) tenure ended late on Sunday night with the appointment of Emmett Shear — the former CEO of Amazon-owned live streaming site Twitch, and the co-founder of its predecessor, Justin.tv. In 2011, Shear began serving as a part-time partner to Y Combinator. It’s unclear what this role entailed, besides advising new portfolio companies, or how long it lasted.

Altman will be fine. OpenAI, however, is an entirely different question.

Artificial Scarcity

A master marketer, Altman has inextricably tied his name and personal brand into that of OpenAI, just like Steve Jobs did to Apple upon his return in 1997. Perceptions are everything, and many — most importantly, OpenAI’s biggest investors — attribute OpenAI’s success to the stewardship of its now-booted CEO. With AI development an incredibly costly business, OpenAI can’t afford a tightening of the capital floodgates.

Nor can it afford to lose its most talented AI engineers. Their skills are a scarce commodity in Silicon Valley, and they could — very easily and very quickly — find alternative employment elsewhere. In the wake of Altman’s shock firing, several OpenAI researchers and engineers resigned (or threatened to resign) unless the board reinstated him. Now that it’s clear he isn’t coming back, will they return to their posts, or will they follow Altman to Microsoft, or join another company? Again, it’s unclear.

On the balance of probabilities, the odds of a major employee exodus seem high, if not inevitable. On Friday, OpenAI employees began tweeting the heart emoji — a shibboleth purportedly meant to indicate one’s intention to leave with Altman. On Monday morning, when it became clear Altman wouldn’t be returning, many employees — including the vanishingly short-lived Murati — tweeted “OpenAI is nothing without its people” verbatim, to which Altman responded with a heart emoji. Again, this is likely a code intended to signal one’s intent to leave.

And it’s a threat they’re seemingly prepared to follow through on. Of OpenAI’s 700 employees, roughly 500 have signed an open letter to the board demanding their resignation, the appointment of two new independent board members, and the return of Altman and Brockman, or they “may choose to resign from OpenAI and join the newly announced Microsoft subsidiary run by Sam Altman and Greg Brockman.” The letter also claims that Microsoft has assured the OpenAI employees that positions exist for them within the new unit.

A worker revolt notwithstanding, it’s unclear how OpenAI will continue to survive under Shear’s stewardship.

Shear doesn’t have much of an AI background, and has never faced the regulatory and legal challenges that threaten OpenAI and the wider AI sector. AI is — for obvious reasons — a heavily-scrutinized industry. Going from CEO of a site where people stream games to one that makes a product with the potential to remake entire industries is akin to a McDonald’s fry cook becoming head chef at a Michelin-starred restaurant.

Will OpenAI continue to grow, or will it slow down to make the company sustainable? Neither Twitch nor its predecessor Justin.tv has ever been profitable, with the former surviving primarily on handouts from its parent company, Amazon. Will Microsoft continue to back OpenAI, or will it see that the company is serious about its non-profit mission? Or, with Altman and Brockman now leading an advanced AI division, will it treat OpenAI not as a partner, but as a competitor?

The latter scenario feels especially plausible. After all, Microsoft has only wired “a fraction” of its $10 billion investment (of which cloud compute credits make up a significant portion) according to Semafor, which also added that CEO Satya Nadella feels that the board “mishandled Altman’s firing,” “destabilizing” the company as a result. It isn’t unthinkable that Microsoft could cut its losses and walk away.

Companies like Microsoft can — and sometimes do — shrug off billion dollar losses as though they’re minor inconveniences. They’re painful, sure, though they hardly present an existential threat. Microsoft wrote off $7.6bn in 2015 after its failed acquisition of Nokia, and undoubtedly lost even more from Windows Mobile as a whole.

And that’s assuming we’re even considering this episode as a loss for Microsoft, which (assuming a mass walk-out occurs) has obtained the talent and leadership behind OpenAI without the cost and scrutiny of a full acquisition. In this scenario, it would have acquired everything besides the IP — which, presumably, it could buy at a later date, with its opponent in the negotiations both vastly-diminished and desperate.

For those self-described “accelerationists,” Shear is undoubtedly their least-preferred choice for CEO. His stated objectives for the first thirty days involve conducting an investigation into the circumstances of Altman’s departure, speaking to OpenAI’s partners, investors, and employees, and reforming the company’s management and leadership team. Shear has also said that, depending on the results of his investigation, he may reform the company’s governance structure, although he provided little detail as to what that would entail.

The Case For Slowing Down

Shear has also expressed his support for a pause or slowdown on AI development, articulated his contempt for tech accelerationists who are willing to risk an AI-induced catastrophe in the name of growth, and described the belief that AI shouldn’t be regulated for the purpose of safety as “total insanity and reckless disregard for humanity's future.” His views stand in stark contrast to those of Andreessen and Armstrong, and the countless other Rot Economists in the Valley.

And, even at this late stage, there remains a possibility that Altman may return, with The Verge’s Nilay Patel and Alex Heath saying that Altman and Brockman’s move to Microsoft is “not a done deal,” noting the pressure being heaped upon the OpenAI board.

It seems some of the board members responsible for his ouster have, in the face of massive backlash, developed cold feet, with Sutskever expressing his “[deep] regret [for his] participation in the board’s actions” and his intent to “do everything [he] can to reunite the company.” Sutskever is, incidentally, the twelfth signatory on the open letter demanding Altman’s reinstatement and the board’s — or, rather, his — resignation. Shear’s tenure could be just as short-lived as Murati’s.

In the end, OpenAI may end up being the most important company in Silicon Valley history, not just for the technology it builds, but for the controversies it’s going to create — and, evidently, has already spawned. Even though fears about an Artificial General Intelligence (AGI) may be hyperbolic (or, rather, somewhat premature), there is a real problem in rushing artificial intelligence into the functionality of the world as the technology currently stands. AI making goofy factual errors can be good for a laugh, but when poorly-tested (or error-prone) artificial intelligence enters critical infrastructure like healthcare, the results could be deadly.

Right now, the conversation has become polarized between the growth-hungry monsters and those who fear that artificial intelligence will get smart enough to kill them, leaving out the messy middle ground of what half-baked automation can do. Sam Altman’s pace of development was (is?) nakedly one focused on revenue-generation and the proliferation of ChatGPT — most of its revenue comes from premium subscriptions to the product — and I imagine the only way to scale this company is through forcefully putting AI in as many places as possible. What happens if a Knight Capital-style glitch happens in a healthcare system, or a payments processor, or a power grid?

People shouldn’t be afraid of an AGI — they should fear what happens when greedy executives look to automate away as much as possible. Now that OpenAI’s non-profit board has been proven to be entirely beholden to financial interests, we should be deeply concerned with the result, especially as they worry about the growing threat from companies like Anthropic and its ChatGPT competitor Claude — and The Information reports that OpenAI’s customers are already fleeing amidst all of this uncertainty.

And you’d be wrong to see this simply as a battle between startups. Anthropic is backed by both Google and Amazon (who, of course, compete with Microsoft in cloud) and Chaebol SK Telecom, part of SK Group, a 70-year-old ultra-conglomerate that owns swaths of South Korean infrastructure, including telecommunications, pharmaceuticals, gas stations, power and semiconductors.

This is a fight for the soul of the tech industry between those who want to build the future, and those who want to plunder and profit from Silicon Valley until the land cracks beneath them, devouring those unfortunate enough to have stuck around. This is a fight between the ultra-powerful that see another part of our society’s infrastructure that they want to control, as they have the devices we use and the cloud services we depend on for our software. This is the future being both built and destroyed at the same time, obfuscated by a delicate and frustrating game of executive musical chairs.

And this entire fiasco has shown that the tech industry is entirely beholden to the largest and richest men in technology, and no amount of theoretical altruism or positive motives can outdo those who control the money and the cloud infrastructure of the tech industry.